The most powerful AI reasoning models now have the potential to open up important parts of their black box. OpenAI's o3 model can solve previously impossible problems by "thinking step by step" in an internal chain of thought, but users are typically shown only a sanitized summary of it. This post argues that this is not simply a technical choice. It is a fundamental decision about whether AI systems should be interpretable to the people who depend on them and to the external auditors who need to evaluate a model after deployment.

-*-

“Thinking Out Loud”

We believe that a hidden [non-public] chain of thought presents a unique opportunity for monitoring models. Assuming it is faithful and legible, the hidden chain of thought allows us to "read the mind" of the model and understand its thought process.

- OpenAI, September 12, 2024

The 2022 paper “Large Language Models are Zero-Shot Reasoners” by researchers at Google Brain and the University of Tokyo revolutionized more than just the field of prompt engineering. The authors found that adding the words “Let's think step by step” before a model’s answer improved scores by as much as 340% on math benchmarks. It turns out that giving a model room to write out its thoughts before answering, known as its chain of thought, greatly improves answers on hard problems. (Whether this reflects improved pattern recognition or genuine reasoning ability remains an open question.)
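To make the trick concrete, here is a minimal Python sketch of how such a prompt could be assembled. The `Q:`/`A:` template and the function name `build_prompt` are assumptions chosen for illustration, not code from the paper, and no model is actually called.

```python
# Hypothetical helper: only builds the prompt text, it does not query a model.
TRIGGER = "Let's think step by step."


def build_prompt(question: str, use_cot: bool = True) -> str:
    """Return a prompt whose answer slot optionally opens with the CoT trigger."""
    prompt = f"Q: {question.strip()}\nA:"
    if use_cot:
        # The trigger phrase is placed where the model's answer would begin,
        # nudging it to write out intermediate steps before the final answer.
        prompt += f" {TRIGGER}"
    return prompt


if __name__ == "__main__":
    print(build_prompt("A farmer has 3 pens with 4 sheep each. How many sheep?"))
```

Calling `build_prompt(question, use_cot=False)` gives the plain baseline prompt, so the same question can be sent both ways and the answers compared.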

This paradigm has other advantages too. Importantly, chain of thought (CoT) holds the promise of making AI more interpretable. While an LLM contains a large amount of knowledge, it is nearly impossible to know how any specific answer was arrived at (especially when no external websites are visited). Assuming the model's thoughts are faithful to what's happening under the hood, chain of thought could provide a legible record of the model’s internal deliberation process. A user or third-party auditor could then inspect and verify this record, potentially protecting against misinformation, hallucinations, and misaligned behavior, to the extent that the behavior follows from the thoughts.

CoT quickly moved from being purely a prompting technique used at inference time to being baked into the model’s training. OpenAI’s o1 model (later assessed by outside researchers in a paper with the ambitious title of “Evaluation of OpenAI o1: Opportunities and Challenges of AGI”) was perhaps the first to do this seriously. The model was trained to think longer before answering questions. It ended up completing previously impossible tasks, such as deciphering encrypted messages or solving difficult math problems. But OpenAI’s newest model had one drawback compared with the traditional CoT approach: the end user received only a summary of the CoT. And developers, who were paying for every token of thinking, didn't receive even that summary.

Keeping CoT Hidden

OpenAI had two major incentives for obscuring the CoT:

1) They feared that disclosing the CoT would create pressure to moderate it during training, which could teach the model to keep its ‘bad thoughts’ out of the written CoT. This would defeat the point of looking at them in the first place. But the lack of moderation also meant that the CoT could contain unsafe material.

2) They wanted to maintain their competitive advantage over other model developers, and to protect their model from distillation through its CoT. Distillation entails training a smaller (cheaper) student model on the outputs of a larger, more powerful and more costly teacher model, so that the student picks up the teacher's reasoning capabilities. DeepSeek explained this process in their infamous R1 model paper. OpenAI has since accused DeepSeek of distilling their models to create R1. The less output model providers show to users, including the CoT, the harder it is for other model developers to ‘steal’ the reasoning for their own model.
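The distillation concern in point 2 can be illustrated with a small Python sketch of how teacher responses might be turned into training data for a student model. Everything here is hypothetical (the `TeacherOutput` type, the field names, and the `Final answer:` format are assumptions), and real distillation pipelines involve far more than this. The point is only to show what the student has available to imitate when the CoT is exposed versus hidden.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TeacherOutput:
    """One response collected from the larger teacher model (hypothetical shape)."""
    prompt: str
    answer: str
    reasoning: Optional[str] = None  # None when the provider hides the CoT


def to_training_example(out: TeacherOutput) -> dict:
    """Build a supervised fine-tuning record for the student model."""
    if out.reasoning:
        # With the CoT visible, the student can imitate the reasoning itself.
        target = f"{out.reasoning}\nFinal answer: {out.answer}"
    else:
        # With the CoT hidden, only the final answer is available to imitate.
        target = f"Final answer: {out.answer}"
    return {"input": out.prompt, "target": target}


if __name__ == "__main__":
    visible = TeacherOutput("What is 12 * 3?", "36", "12 * 3 = 10 * 3 + 2 * 3 = 36.")
    hidden = TeacherOutput("What is 12 * 3?", "36")
    print(to_training_example(visible))
    print(to_training_example(hidden))
```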

Notably, DeepSeek then chose to fully expose R1's CoT to all users. This became a hugely popular feature, one that put pressure on Western model developers to open up their own CoT reasoning. Some users found it helpful to see the “thought process” so they could verify how the model arrived at its conclusion.

Trusting a Black Box? It depends which model you are using…

There are risks that come from hiding or obscuring the CoT; not necessarily to the model developers, but to the third-party API users and consumers relying on the output. Whether justified or not, LLMs are increasingly used in high-stakes situations such as agentic software development, assisting lawyers, advising doctors, and helping users make major “life decisions”. Their outputs carry real-world consequences and risks beyond one company's reputation. As LLMs become more ubiquitous, the need for the consumer or firm to examine how a model arrived at a particular conclusion will only become more pressing. Anthropic put it best in a recent paper on interpretability:

We mostly treat AI models as a black box: something goes in and a response comes out, and it's not clear why the model gave that particular response instead of another. This makes it hard to trust that these models are safe: if we don't know how they work, how do we know they won't give harmful, biased, untruthful, or otherwise dangerous responses? How can we trust that they’ll be safe and reliable?

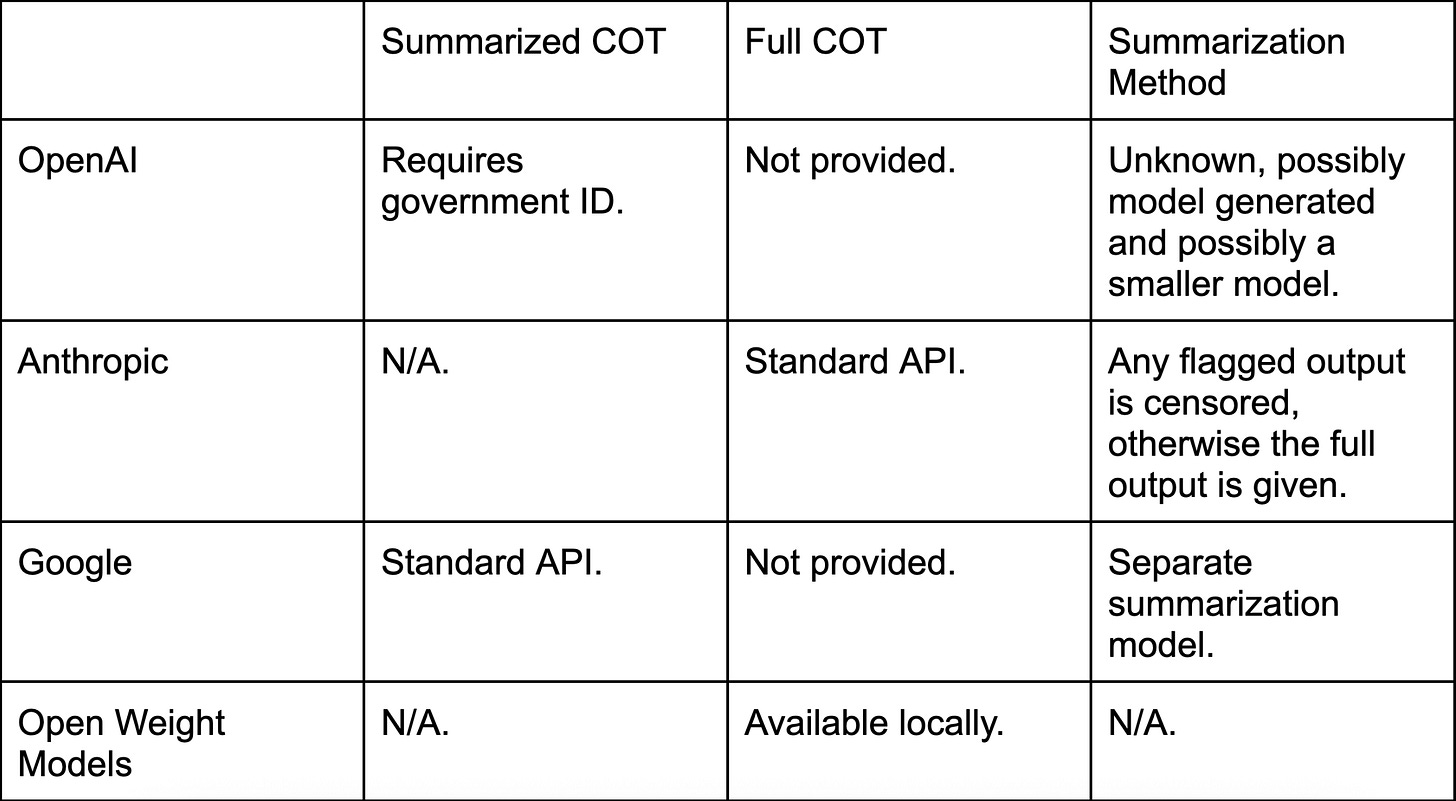

Disclosures of CoT thinking remain strikingly uneven, though (Table 1 below). Anthropic, a company with a stated mission to create safer, more transparent AI, and with relatively more business users of its API, has the most transparent policy: it shows the full CoT reasoning. But OpenAI, the most popular consumer model provider, only provides a summary, and only after a verification process. Yet no tools currently exist to assess how faithful the summaries are to the full CoT.

Table 1. Disclosure of model CoT by model developer

Third-party developers, researchers, and users are already at a major disadvantage when it comes to model interpretability and monitoring. They only see the outputs that are provided to them, and unless the model is open-weights they never get to inspect its internals. This makes interpretability systems that analyze a model's internal activations, like Anthropic's circuit tracing tools and Gemma Scope, impossible to implement for anyone but the model developer.

Disclosures and Post-Deployment Monitoring

The decision whether to summarize an AI system's CoT — and how much information to obscure — has serious consequences for the entire AI ecosystem. It affects every user and third-party developer who relies on these systems.

CoT is not a panacea, but it could become an important tool for model interpretability and monitoring post-deployment — especially if it proves useful for predicting misaligned behavior or poor outputs. The key challenge is that we need more disclosure to make progress here. The more access researchers have to full CoT data, the better we can test whether internal reasoning actually predicts problematic outputs. Currently, third parties have few tools to assess how faithful model summaries are to the true CoT, much less how faithful the full chain of thought is to the model's actual reasoning process. Evidence on these critical questions remains mixed (here and here).
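One simple way to frame the "does the CoT predict problematic outputs?" question is as a classification check. The Python sketch below assumes a researcher already has, for each model response, a flag from some CoT monitor and an independent label saying whether the final output was actually problematic. Both the monitor and the labels are hypothetical here, and the function name is an assumption for illustration; it is one possible framing rather than an established evaluation protocol.

```python
from typing import Iterable, Tuple


def monitor_precision_recall(
    records: Iterable[Tuple[bool, bool]],
) -> Tuple[float, float]:
    """Score a CoT monitor against labeled outcomes.

    Each record is (cot_flagged, output_was_bad).
    Precision: of the flagged traces, how many led to bad outputs.
    Recall: of the bad outputs, how many the monitor flagged in the CoT.
    """
    tp = fp = fn = 0
    for flagged, bad in records:
        if flagged and bad:
            tp += 1
        elif flagged and not bad:
            fp += 1
        elif bad:
            fn += 1
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


if __name__ == "__main__":
    sample = [(True, True), (True, False), (False, True), (False, False)]
    print(monitor_precision_recall(sample))
```

Low recall on real data would suggest that problematic outputs often arise without any visible sign in the CoT, which is exactly the faithfulness worry. Running such a check at scale requires access to the full traces.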

Graduated transparency frameworks are likely part of the solution. The path forward doesn't require full transparency like DeepSeek's R1 approach, but it does demand meaningful options. Enterprise users deploying AI in critical applications should have access to full reasoning traces. Researchers studying AI safety need sufficient data to validate interpretability methods. And regulators overseeing high-risk AI systems require auditable decision processes.

The current patchwork of disclosure policies cannot scale with AI's growing influence. As these systems become more powerful and ubiquitous, the case for structured transparency will only strengthen. One open question is how to incentivize industry to lead this transition, or failing that, how to compel it to follow. Competitive market pressures should not be permitted to override the disclosure infrastructure that public trust demands.
