Weekly Roundup 2/12

Paris AI Action Summit, new safety frameworks, cheese hallucinations, and more

Text vs. subtext. The Paris AI Action Summit kicked off on Monday. The aim was for countries to sign a (non-binding) “Statement on Inclusive and Sustainable Artificial Intelligence for People and the Planet.” Various critiques have been leveled at the final statement (“Pledge for a Trustworthy AI in the World of Work”), ranging from its vagueness to its neglect of existential and national security risks. But the U.S. and U.K. declined to sign, and their reasons appear to be political. The U.S. currently seems unwilling to join anything multilateral, and the U.K. may not have liked the focus shifting to a broader range of safety issues, though it rightly noted that the statement wasn’t particularly concrete. China, India, and Germany signed the declaration, along with around 55 others. But the text seems less important than the subtext: whether Europe could develop viable alternatives to AI driven by U.S. Big Tech, reducing the EU’s increasingly acrimonious and resentful reliance on U.S. technology companies.

The major announcements of the event were not about safety but about the differences between European and U.S. industrial policy; we believe this was the occasion’s true symbolism. In a fairly confrontational speech, U.S. Vice President J.D. Vance said that U.S. AI policy is U.S. industrial policy, while Europe announced capital raising and joint ventures to support European AI development. Notably, Europe’s current AI champions, Mistral and Hugging Face, along with Meta’s French-born chief AI scientist Yann LeCun, are all focused on open-source AI. So to the extent that Europe builds its AI stack on an open ecosystem, investment in that ecosystem could make it both safer and more competitive. Time will tell.

U.S. AI Safety Institute (AISI) in flux. The future of the U.S. AISI, and of AI regulation more generally, is unclear. President Trump repealed Biden’s AI Executive Order and replaced it with his own. It’s no surprise, then, that Elizabeth Kelly, the head of the U.S. AISI, has left, with no replacement announced. Trump’s new AI Executive Order centers on industrial policy, fused with a broadening national agenda (echoing Google’s statement below on its changing AI principles). It states: “It is the policy of the United States to sustain and enhance America’s global AI dominance in order to promote human flourishing, economic competitiveness, and national security.” A request for public comment has been issued to help develop the AI Action Plan that the order calls for. The Executive Order could be read, at least in part, as an extension of the heightened restrictions on GPU purchases and rentals put in place in the final weeks of the Biden administration, which apply not just to Chinese firms but also to companies in ordinarily friendly trading partner countries. (See SemiAnalysis for the deep dive, as always.)

New safety frameworks from Meta and xAI: old wine, new bottles? In the past few days, Meta and xAI have released an updated and a new safety framework, respectively. Both follow the responsible scaling policy playbook established by METR. Meta’s framework introduces outcome-based risk thresholds: it first identifies the catastrophic outcomes to avoid, then maps all the pathways that could lead to them. This yields a more concrete and defensible set of thresholds, but Meta still evaluates only CBRN (chemical, biological, radiological, and nuclear) and cybersecurity risks. While these are genuine risks, they are a small subset of the possible harms from this technology as it permeates every aspect of society and the economy.

xAI’s framework has the same limitations, but it is commendably specific about which benchmarks the company uses to evaluate its models, which makes outside auditing easier. xAI also includes a section on agent safety, suggesting the company is developing a method to identify AI agents, possibly through HTTP headers. No other safety framework from a leading developer addresses agentic safety, even as agentic capabilities are being rolled out. Safety frameworks can be useful policy instruments, but they are nowhere near the point of enumerating all relevant AI risks, existential or otherwise.

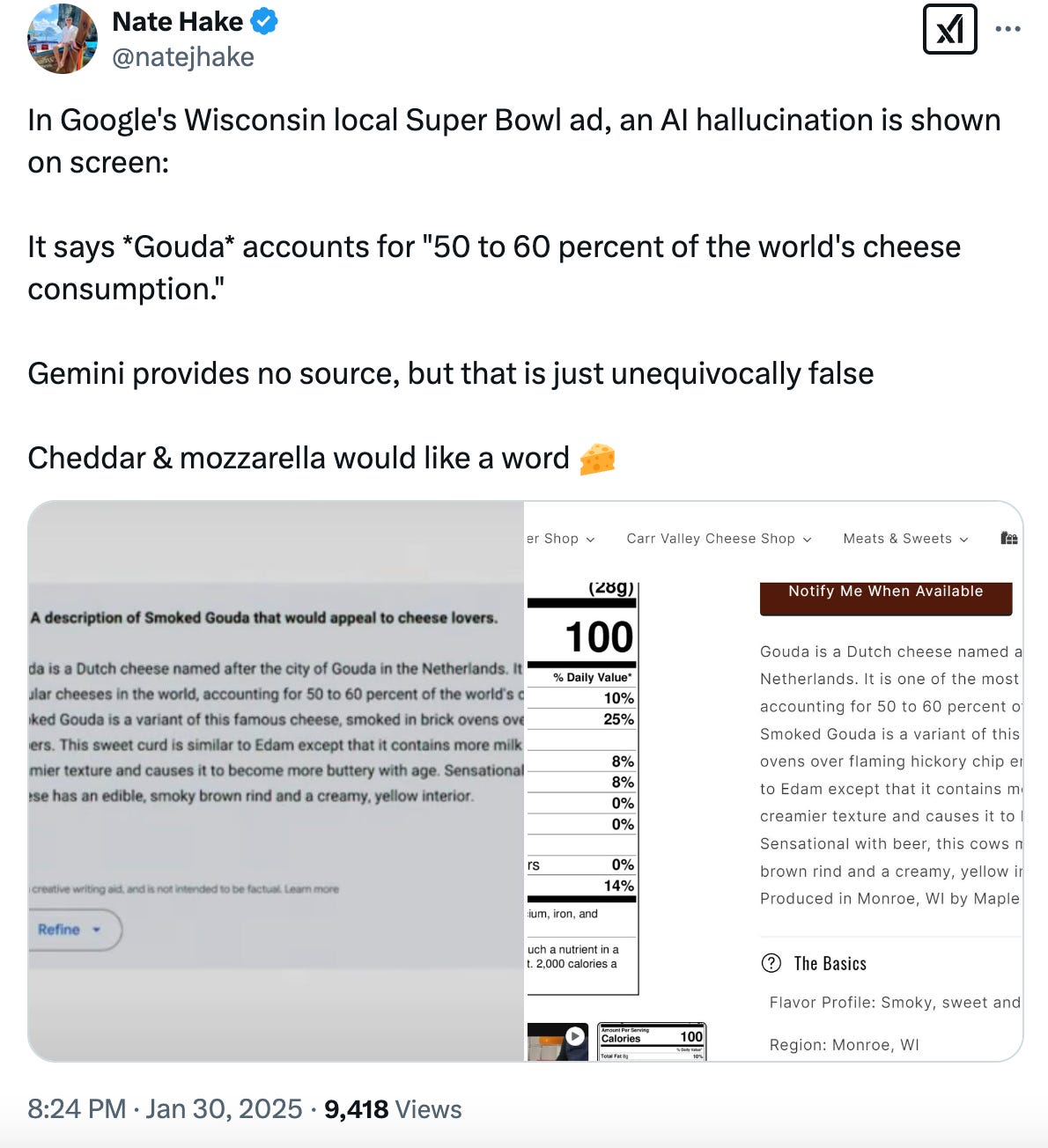

Even with high public stakes, industry can’t catch AI bluffs. Last week, Google released an ad promoting Gemini that featured an embarrassing AI hallucination. The ad, since edited, shows Gemini generating copy for a cheesemonger that confidently states that Gouda accounts for “50 to 60 percent of global cheese consumption.” Somehow, this blatantly false “fact” made it into the final cut and was caught only after a user pointed it out in a tweet. The blunder underscores how hard hallucinations are to catch, even for large companies with a lot riding on AI’s reputation for reliability.

Although LLM capabilities are advancing rapidly, it’s important to remember that these models still make mistakes. A startling new BBC report makes this clear: around 50% of AI chatbot answers involving BBC articles were badly wrong or misleading.

Undoing the original sin? Governments looking to encourage the rapid development of frontier AI face the challenge of giving AI systems access to vast datasets while also figuring out how to ensure that creators are paid fairly. The real question is how to structure AI monetization so that those who create the content can also profit. A UK Parliamentary committee recently heard from professional creators and publishers concerned about AI developers scraping copyrighted material for training. Right now, the system favors developers who build on top of AI models, as well as the model providers themselves: both can generate significant revenue (though not necessarily profits), while creators have far fewer ways to monetize.

However, banning scraping or mandating public disclosure of training data isn’t the only way to ensure fair compensation. A private auditing body could verify what materials were used in training. This approach supports a market-based rather than a government-driven solution: if companies were required to submit their training data to an auditor, and creators could check whether their work was used, it would spur new business models that pay creators fairly for their content.

From military-industrial to military-tech complex? The U.S. military-industrial complex, long built on established industrial alliances, is under commercial attack from Anthropic, Google, AWS, Palantir, and others trying to win business from the old industrial incumbents. Exhibit A: Google unveiled a new safety framework this week, detailing its ongoing work on responsible AI development in a blog post. Conspicuously missing was the old provision prohibiting the development of AI applications “likely to cause harm,” including weapons and surveillance. Andrew Ng thinks critics of this change are misguided, while Parmy Olson at Bloomberg calls it dangerous. But the change is best considered in light of Anthropic and OpenAI effectively doing the same thing: Anthropic CEO Dario Amodei’s recent op-ed in the WSJ reads as a public application to become a vendor for the U.S. military. Exhibit B: Anduril and Microsoft were just awarded their first major joint military contract (to our knowledge), for work on the Integrated Visual Augmentation System (IVAS) program. The AI race, then, is clearly also a race to monetize AI, including by supplying the inputs for the national competition between China and the U.S. And to quote a previous roundup, “As military use of AI is prioritized, we can expect lighter U.S. government regulation on AI — or at least a growing bifurcation in the regulatory environment for AI’s dual uses (military vs. civilian).”

“There’s a global competition taking place for AI leadership within an increasingly complex geopolitical landscape. We believe democracies should lead in AI development, guided by core values like freedom, equality, and respect for human rights. And we believe that companies, governments, and organizations sharing these values should work together to create AI that protects people, promotes global growth, and supports national security.” - Google, Responsible AI 2024

Thanks for reading! If you liked this post, please share it, and if you aren’t yet a subscriber, click “subscribe now.”