Weekly Roundup (February 19, 2025)

OpenAI gets erotic, UK AI safety institute gets a rebrand, and more.

UK AI safety gets a rebrand. The UK AI Safety Institute got a makeover last Friday, switching the “S” from safety to security. From the press release: “This new name will reflect its focus on serious AI risks with security implications… It will not focus on bias or freedom of speech, but on advancing our understanding of the most serious risks posed by the technology.”

The name change, along with the Institute's new mandate, appears to be rooted in a broader desire by the UK government to align more closely with U.S. policy and its deregulatory agenda, perhaps in the hope of attracting more foreign capital. The Institute’s press release explicitly notes that its intention is to “unleash economic growth that will put more money in people’s pocket” and “turbocharge productivity”. The Financial Times reports that “the UK’s new ambassador to the US, Peter Mandelson, said his ‘signature policy’ would be fostering collaboration between the two countries’ tech sectors, to ensure both countries could secure a ‘logical advantage’ over China.” The regulatory AI race has begun, and existing incentives suggest it will be a race to the bottom.

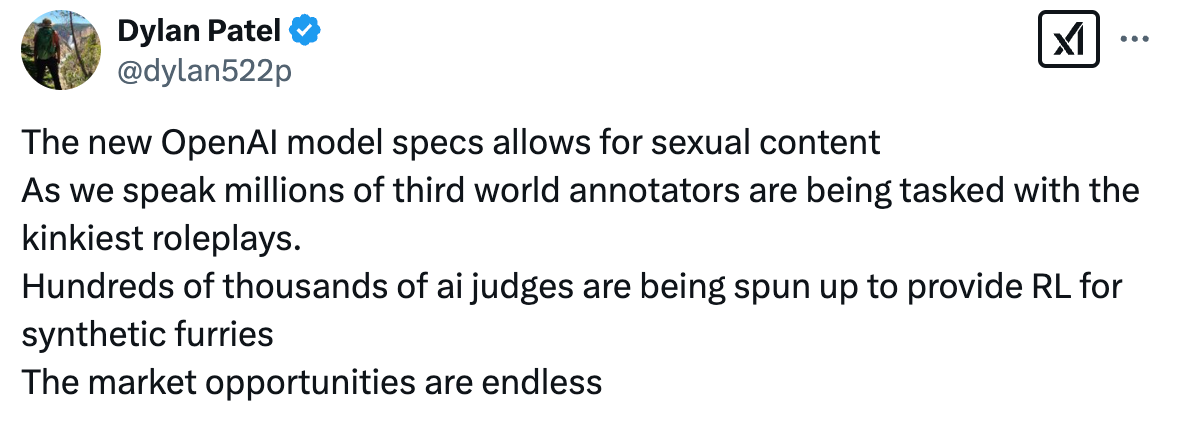

OpenAI opens the door to AI erotica. OpenAI released a significantly updated and expanded “model spec” last week. The model spec “outlines the intended behavior for the models that power OpenAI's products, including the API platform”: essentially a constitution for how models should behave. Besides clarifying and expanding on the previous document, the update revises OpenAI's policy on sexual content, stating that “to maximize freedom for our users, only sexual content involving minors is considered prohibited.” In fact, that is the only content category with a blanket ban in the new spec. The model is designed to be more helpful in general, which means engaging with users who venture into dodgy territory, including questions about potentially illegal activities.

This change follows a broader trend of developers relaxing their restrictions on model usage (Google’s update to allow military use was noted in last week’s roundup). It’s not surprising that developers are easing usage restrictions as they compete for market share and as users and developers clamor for greater flexibility. Another possible outcome of this change is deeper personalization in conversations between model and user, much as today’s recommendation algorithms claim to offer. Overall, OpenAI’s new model spec is a detailed and positive example of openly disclosing the developer policies used to steer model behaviour. All the same, it highlights how companies’ purported “safety values” are extremely malleable and not necessarily just about safety.

“AI Redteaming is Bullshit.” The organizers of DEF CON released a collection of their findings from last summer’s gathering, with a revealing section on redteaming. Drawing on the broader cybersecurity tradition, the DEF CON document argues that general software (and hardware) security mechanisms, such as Common Vulnerabilities and Exposures (a public database of known information-security vulnerabilities) and Vulnerability Disclosure Programs (company-specific channels through which users can report bugs), can be repurposed and expanded to coordinate AI redteaming and safety. The authors also argue that redteaming is currently ineffective and would benefit from detailed documentation, namely a proper model card that includes the intended downstream uses of the model. Without proper disclosure of intended use cases, they note, the space of potential attacks to test for is simply too vast. This is in line with our belief that considering model safety alone is insufficient. Unless you consider deployment (how models are being used downstream, and by whom), all you are doing is assessing the capability to do harm without putting in place sufficient mechanisms to detect or counter it.

DOGE pushes for AI to replace workers. As the Trump administration fires thousands of federal workers, the New York Times reports that Musk’s next target is the Department of Education. The department currently contracts a call center that fields over 15,000 questions per day about government-provided student loans. DOGE is looking to replace the call center with an LLM-powered chatbot that could, in theory, do the work of more than 1,000 people. This sort of service work could benefit immensely from AI assistants that provide personalised, timely, and accurate advice. The open question is how to keep humans in the loop to verify that the model can actually do this, which requires proper auditing and disclosure guidelines to ensure models behave as intended. Otherwise, you could end up with something like New York City’s failed chatbot, which told employers it was OK for bosses to take workers’ tips.

In other words, Musk’s taste for innovation needs to come with safeguards that let us assess whether the technology is in fact making us better off. (See Gary Marcus on the failings of Musk’s vision for AI.)

Thanks for reading! If you liked this post, please share it, and click “Subscribe Now” if you aren’t yet a subscriber.