Weekly Roundup (March 5, 2025)

AI's manipulation problem, OpenAI has got Meta's back, the latest in Google's enshittification, and more!

“The AI manipulation problem”. Louis Rosenberg, CEO of Unanimous AI, highlights a critical vulnerability in human-AI interactions: conversational AI may already be able to decode human personalities to optimize for persuasion in real time, responding instantly to consumer cues and super-powering targeted advertising. This represents a fundamental shift from social media’s targeted content, described as "buckshot fired in your general direction", to "heat-seeking missiles" personalized to each individual's psychological profile. Models are only getting more persuasive (newly released GPT-4.5 achieves “state-of-the-art performance” on admittedly uninformative machine-to-machine persuasion evals), yet regulatory safeguards addressing this manipulation risk remain underdeveloped. AI applications that manipulate human behavior are prohibited under the EU AI Act. But how should manipulation be defined? And would the EU be prepared to effectively limit monetization rates on major platforms by intentionally degrading the efficacy of their advertising and recommendation algorithms?

Rosenberg's proposed remedies (mandatory disclosure of AI’s objectives, prohibition of AI having an optimization loop for persuasiveness, and limits on AI using personal data for manipulation) focus on preserving what he calls a user’s epistemic agency: “an individual’s control over his or her own personal beliefs”. But most users don’t have fixed, god-given preferences (“exogenous” preferences, in economics speak). Much online user behaviour is intentionally explorative: users go online to learn, and even to form new preferences. The issue ultimately is, as Rosenberg notes, how to ensure algorithms work for users and not against them. The most effective way so far to dull users’ conscious decision making, thereby ensuring a company’s algorithms maximize for a narrow suite of corporate objectives, has been through highly addictive, dopamine-inducing feeds on social media. AI is already helping fuel higher monetization rates on Facebook and YouTube. Yet we don’t know whether these changes are working for or against users. Existing internet platforms are among the largest adopters of new AI technologies. So if we want insight into AI’s emerging impacts, we think it makes sense to start by requiring enhanced algorithmic disclosures from these platforms.

ChatGPT catches Llama misuse. OpenAI's latest (February 2025) threat report demonstrates how AI labs can serve as crucial early warning systems for malicious activity online. The report details how Chinese actors used Meta’s open-source Llama model to write code for a sophisticated surveillance operation (dubbed "Peer Review"), while also using OpenAI’s ChatGPT model to debug Llama’s code. It’s easy to label Meta’s open-source Llama model as the problem here. But the real point, we think, is that the Chinese actors were using OpenAI instead of DeepSeek to do their code debugging. Why? We can’t be sure, but it could be because of the efficacy of America’s chip ban, which really binds at the level of model inference, notes Dylan Patel, limiting AI’s adoption and use. In using OpenAI’s model, Chinese actors are really using American compute remotely.

Important questions also remain about the technical infrastructure behind this sort of malicious use detection. Unlike Anthropic, which has described its Clio post-deployment auditing system, OpenAI has provided almost no details about its monitoring and auditing systems. This creates a blind spot in understanding how AI companies can detect similar malicious use attempts in the future, especially as adversaries adapt their tactics to avoid detection.

Google’s enshittification: a bad omen? A new study from The Verge and Vox Media provides evidence in support of Tim O'Reilly’s thesis on tech companies' transition from innovation to extraction in their platforms and algorithms. The survey found that 42% of respondents believe Google Search and similar search engines are becoming less useful, while 52% report turning to AI chatbots or alternative platforms like TikTok instead of Google for information needs. 66% said the quality of information in Google Search is deteriorating, making it difficult to find reliable sources.

This looming user exodus perfectly illustrates Tim’s "robber baron rents" thesis. Google Search initially dominated by using algorithms to extract signal from massive data volumes (websites), creating value by allocating attention efficiently and then benefiting in turn from a “rising tide” rent. However, as the market saturated and growth slowed, Google pivoted from user-centric innovation to revenue extraction through increased advertising placement. The once-complementary ads that ran alongside organic results became substitutes that pushed higher quality organic results further down the page, so that Google could extract more attention revenue from users and advertisers: a “robber baron” rent.

With generative AI, we could finally be witnessing the market reaction to Google’s enshittification. Google’s extractive behavior with Search has created an opportunity for disruptive technologies and community-based alternatives (such as Reddit) offering higher quality sources of information, which is precisely what the Verge's research found users are increasingly seeking. 61% of Gen Z use AI tools instead of Google or other traditional search engines. The question remains whether today's AI innovators will eventually succumb to the same profit-maximizing tendencies when their growth inevitably slows. Already, Google’s AI overviews that appear in many search results could be sending more traffic to Google's own property, YouTube. Alphabet’s shareholder report notes, though, that AI summaries in search results are monetized at a similar rate to ordinary search results. Without learning from our past (and present!) regulatory failures, we’re doomed to repeat the cycle, with users paying the price.

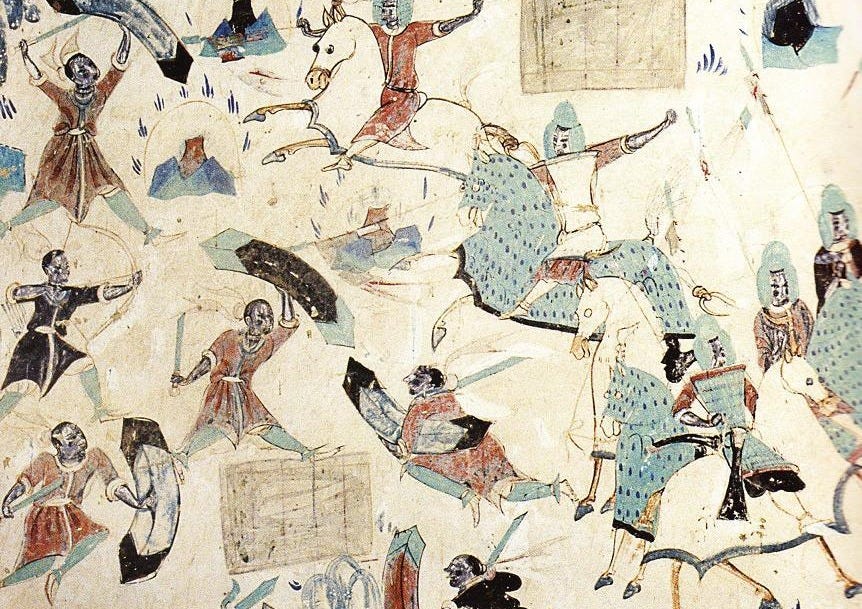

Note: A depiction of the Avadana story of the Five Hundred Robbers showing soldiers wearing medieval Chinese armour, from Mogao Cave 285, Dunhuang, Gansu, China. (Source: Via Axb3 on Wikimedia Commons)

Business trust in AI reaches an inflection point. Two significant developments signal a shift in how heavily regulated industries are integrating AI. In China, the Financial Times reports DeepSeek's R1 model has catalyzed "lightning adoption" across state institutions since January 2025, with traditionally cautious entities like hospitals, government agencies, and state-owned enterprises rapidly implementing the technology. Concurrently, The Information reports that Danish pharmaceutical giant Novo Nordisk has cleverly integrated Anthropic's Claude 3.5 Sonnet, using RAG and other techniques, into regulated document workflows, dramatically reducing its clinical document creation time from 15 weeks to just 10 minutes. These parallel developments across different market contexts indicate a crucial transition in generative AI’s adoption, even as the technology’s efficacy remains in its very early stages.

The Chinese deployment of DeepSeek also carries significant geopolitical dimensions. President Xi personally met with DeepSeek's founder alongside established business leaders like Jack Ma, signalling the technology's strategic importance to Beijing at this time. The government’s endorsement helps to create a technological ecosystem that naturally advantages domestic AI development, while potentially locking out Western competition. By contrast, Novo Nordisk — a European pharmaceutical company operating in one of the world's most stringently regulated industries — has voluntarily adopted a closed-source American AI model for sensitive regulatory documentation.

These cases highlight divergent adoption models: China's more coordinated national strategy versus the purely market-driven, VC-driven American approach. An emerging question is whether the innovation advantages of a more open American system can maintain competitive momentum against state-backed alternatives optimized for rapid, coordinated deployment across strategic sectors.

Model risks vs API risks. Sam Schillace's recent essay on our tendency to anthropomorphize AI systems highlights a critical disconnect in how we conceptualize AI security and governance. His assertion that "it's not your friend, it's an API" underlines that AI risks are not just about the model but also about how the model is accessed and integrated into software architectures online, in this case via APIs. There is a fundamental tension between how we design AI systems to be secure and how we talk about AI security. Technical frameworks for AI model alignment often focus on a model’s narrow capabilities (such as preventing the model from saying harmful things), while ignoring the broader architecture of how these systems actually operate in production environments.

Consider his observation about information security:

"Back in the land of an API call, we understand that if we pass a piece of information to a third-party API, we have no idea at all what happens to it - it's now public, in the wind. And yet, many ‘agent’ designs and other products I see seem to have implicit assumptions that if we somehow ‘convince’ the LLM to be trustworthy, we can trust it with secrets, like a person."

We trick ourselves into believing that we can establish "trust" with a model through clever prompting or system messages, when in reality we're sending data to a computational black box owned by another entity. This isn't merely a semantic concern — it should directly shape the risk models and regulatory frameworks needed to align business incentives with secure AI model deployments.
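
To make the "it's an API" framing concrete, here is a minimal Python sketch of one way to act on it: treat any outbound prompt as data leaving your boundary, and strip secrets before the call rather than asking the model to keep them. The pattern list, the `redact` and `build_outbound_prompt` helpers, and the placeholder format are all hypothetical names invented for illustration; they do not come from Schillace's essay or from any vendor's SDK, and a real deployment would need a far more thorough approach to detecting sensitive data.

```python
import re

# Hypothetical patterns, for illustration only. Real systems would need
# their own, much more complete, list of what counts as sensitive.
SECRET_PATTERNS = {
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def redact(text: str) -> str:
    """Replace anything matching a secret pattern with a placeholder."""
    for label, pattern in SECRET_PATTERNS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


def build_outbound_prompt(system_note: str, user_text: str) -> str:
    """Assemble the text that would be sent to a third-party model API.

    The system note is passed through as-is: telling a model to "keep
    secrets" is not a security control. The control is that secrets are
    removed before anything crosses the boundary.
    """
    return f"{system_note}\n\n{redact(user_text)}"


prompt = build_outbound_prompt(
    "You are a helpful assistant.",
    "Deploy with key sk-abcdef1234567890XYZ and email ops@example.com.",
)
print(prompt)
```

The design choice is the point rather than the regexes: the trust decision is made in ordinary code on your side of the API call, where it can be tested and audited, instead of being delegated to a prompt.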

What's required isn't just better technical model alignment, but a reorientation of how we integrate AI systems in regulatory frameworks: seeing them not as quasi-sentient partners, but as powerful architectures to be secured through careful systems engineering, including product lifecycle engineering techniques with continuous feedback loops.

What do you think? We’d love to hear from you.

Thanks for reading! If you liked this post, please share it with others as we look to expand our reach, and click “subscribe now” if you aren’t yet a subscriber.