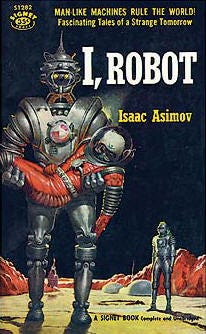

Welcome to Asimov's Addendum

The three laws of robotics were the beginning of the story, not the end...

Back in the 1940s, Isaac Asimov imagined a society made up of humans and intelligent robots. “The Three Laws of Robotics” guided the safe and ethical behavior of robots and gave Asimov ample opportunity to explore the many ambiguities in the laws and the practical difficulties of implementing them. All of these conflicts were successfully resolved in fiction, and Asimov seemed to think that the laws might indeed be sufficient to guide robot-human interaction:

“The First Law of Robotics: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

The Second Law: A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

The Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.”

The science fiction of the succeeding decades gave us far darker visions. Monomaniacal, homicidal robots and artificial intelligences became the norm, replacing Asimov’s vision of intelligent machines as contributing members of a human-robot society. Works such as 2001: A Space Odyssey and James Cameron’s Terminator movies made the case that artificial intelligences would prefer their own objectives over ours. If robots became our superiors, they might well treat us the way we treat animals. At best, we might become the equivalent of pets or a resource to be exploited; more likely, we would be wiped out.

Stuart Russell, a UC Berkeley professor and author of the leading AI textbook, set out to counter these risks. He proposed to develop “Human-compatible AI” by following a very different set of three principles:

1. The robot’s only objective is to maximize the realization of human values.

2. The robot is initially uncertain about what those values are.

3. Human behavior provides information about human values.

In his 2017 TED talk, Russell elaborated:

“I’m trying to redefine AI to get away from this classical notion of machines that intelligently pursue objectives. There are three principles involved. The first one is a principle of altruism, if you like, that the robot’s only objective is to maximize the realization of human objectives, of human values. And by values here I don’t mean touchy-feely, goody-goody values. I just mean whatever it is that the human would prefer their life to be like. And so this actually violates Asimov’s law that the robot has to protect its own existence. It has no interest in preserving its existence whatsoever.

The second law is a law of humility, if you like. And this turns out to be really important to make robots safe. It says that the robot does not know what those human values are, so it has to maximize them, but it doesn’t know what they are. And that avoids this problem of single-minded pursuit of an objective. This uncertainty turns out to be crucial.

Now, in order to be useful to us, it has to have some idea of what we want. It obtains that information primarily by observation of human choices, so our own choices reveal information about what it is that we prefer our lives to be like.”
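To make the three principles concrete, here is a toy sketch in Python. It is not Russell's own formulation: it assumes a hypothetical robot choosing between two drinks, two invented hypotheses about what the human values, and a "noisily rational" human whose choices are modeled with a softmax. Every name and number in it is an assumption made for illustration.

```python
import math

# Hypothetical candidate value functions: how much the human values each option
# under each hypothesis. The numbers are invented for illustration.
HYPOTHESES = {
    "prefers_coffee": {"coffee": 1.0, "tea": 0.0},
    "prefers_tea": {"coffee": 0.0, "tea": 1.0},
}


def choice_likelihood(choice, values, rationality=2.0):
    """Probability that a noisily rational human picks `choice` (softmax model, an assumption)."""
    weights = {option: math.exp(rationality * v) for option, v in values.items()}
    return weights[choice] / sum(weights.values())


def update_belief(belief, observed_choice):
    """Principle 3: an observed human choice is evidence about human values (Bayes' rule)."""
    unnormalized = {
        h: belief[h] * choice_likelihood(observed_choice, HYPOTHESES[h])
        for h in belief
    }
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


def best_action(belief):
    """Principle 1: pick the option with the highest expected value to the human."""
    options = list(next(iter(HYPOTHESES.values())))

    def expected_value(option):
        return sum(belief[h] * HYPOTHESES[h][option] for h in belief)

    return max(options, key=expected_value)


# Principle 2: the robot starts out uncertain about what the human values.
belief = {"prefers_coffee": 0.5, "prefers_tea": 0.5}

# The robot watches the human choose a drink four times.
for observed in ["tea", "tea", "coffee", "tea"]:
    belief = update_belief(belief, observed)

print(belief)
print(best_action(belief))
```

After watching the human pick tea three times out of four, the robot's belief shifts heavily toward the tea hypothesis and it serves tea, but it never becomes fully certain, which is the humility Russell describes.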

This discussion, traditionally called Artificial Intelligence “alignment”, tackles the vexing questions that must be answered if AI systems are to fulfill human preferences. AIs must maximize the realization of human values, but the question remains: whose values? Their corporate owner’s? Those of some favored group whose values were used to train the artificial intelligence? What happens when the robots realize humans don’t all have the same objectives? How do we rank pluralistic human values?

As with Asimov’s Three Laws of Robotics, the tale grows in the telling. Russell’s emphasis on algorithmic alignment, while necessary to ensure that machines can do our bidding, is no longer sufficient to ensure that they actually do so. AI technologies are now embedded in products for sale, and so are subject to an additional layer of potentially conflicting economic incentives from their corporate owners. This makes institutional alignment imperative for AI’s safe, equitable, and transparent development. We know this lesson from the recent past.

Big Tech’s product development is not always aligned with consumer welfare; often it is aligned instead with the pursuit of market share or profit. Social media platforms chose to optimize their algorithmic selection of content for engagement – even at the cost of societal well-being. Platforms got users addicted to their smartphones by technological design. Hoovering up our data and eventually using it against us wasn’t a bug; it was “a feature” designed to maximize our engagement with advertising. Why should we expect it to be any different this time around as AI products evolve? “Move fast and break things” and “enshittification” are very real AI risks.

In response, you might quote Adam Smith – arguably the original theorist of alignment problems in a market economy. Smith noted that it is precisely when individuals (as businesses) pursue their private commercial interests that the public interest is maximized; in other words, alignment is perfect so long as trade is free:

“[Businesses] are led by an invisible hand to make nearly the same distribution of the necessaries of life, which would have been made, had the earth been divided into equal portions among all its inhabitants.”

Yet, despite Smith’s overarching skepticism of government intervention in the economy (given this natural alignment of commerce with societal interest), even he saw many instances where interests would diverge. These included cases where governments need to step in to foster new “infant” industries, limit the exploitation of labor, regulate financial institutions, and ensure businesses do not harm consumers through collusive or monopolistic behavior.

The premise of this newsletter is that market incentives will significantly influence the direction of AI’s development. Without corporate guardrails and data disclosures to maintain institutional alignment, AI’s “commercialization risks” could quickly spiral out of control. The other side of risk, of course, is the mechanisms we put in place to manage it. What are the best practices that the developers and deployers of commercial AI ought to be following? We have the example of many other high-risk technologies that have been made a productive part of our economy.

Welcome to the world of Asimov’s Addendum: a space where we intend to reflect broadly on the alignment problem, how disclosure might enable better regulation of AI, and generally consider the next stage of the hybrid machine-human society coming into view. Our perspective, while sometimes theoretical, is guided by the practical concern of ensuring that AI’s impact is as broadly beneficial and safe as possible. There is a great deal of powerful work going on in this area. We will report on what we find to be most useful, and seek to notice the gaps. As it turns out, Asimov’s Laws of Robotics were the beginning of a conversation that we expect to continue for a very long time.

– Tim O’Reilly and Ilan Strauss

AI Disclaimer: GPT-4o was used for light final editing and image generation.