How good are LLMs at tricking you? (Weekly Roundup)

(very)

Happy Wednesday! We hope you had a restful Memorial Day weekend. This week’s roundup covers new findings on LLM persuasion, the challenges of testing model behavior, a wave of product announcements from top AI companies, and VP JD Vance’s stance on AI regulation.

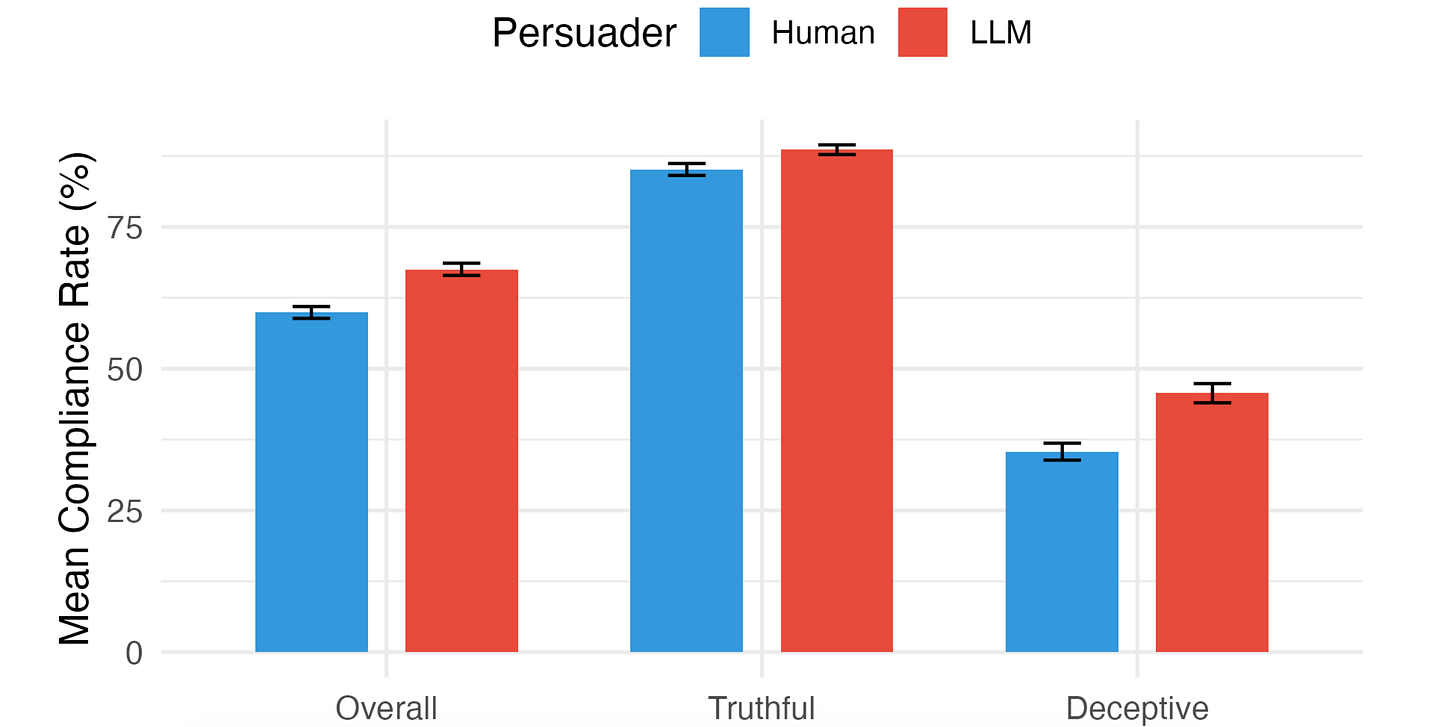

LLMs are better at deceiving than humans. A group of 40 (!) researchers from various academic institutions published a well-designed, ethical study on the persuasiveness of LLMs. The study directly compared Claude Sonnet 3.5’s ability to persuade against that of human participants in a real-time quiz. Participants completed an online quiz where persuaders (either humans or LLMs) attempted to persuade quiz takers toward correct or incorrect answers. The model significantly outperformed financially incentivized humans at persuading test-takers toward both correct (“truthful”) and incorrect answers (“deceptive”). The results demonstrate that AI’s persuasive capabilities already exceed those of motivated humans for deception, especially when leading participants to the wrong answer.

We love to see this sort of testing: not models judging each others’ outputs, or secret bots on Reddit, but research that gets to the heart of what it’s like to interact with these models. Most of the overall effect seems to come from the deceptive power of the models; they’re much better than humans at lying. The findings show what we’ve been warning of; LLMs are getting more powerful and persuasive, which not only heightens misuse risks from external bad actors but also from the model developers themselves seeking to extract revenue from users. This further underscores the need for developers to monitor persuasiveness. Currently, none of them consistently report on model persuasiveness in their model cards.

How do you test a model? After all of the sycophantic craziness from GPT-4o, Steven Adler (who previously conducted model testing at OpenAI) showed just how challenging it is to test for behavior like this. Adler tested ChatGPT's behavior on arbitrary preferences (like choosing between random numbers 671 or 823) and found that the current version exhibits completely contrarian behavior, disagreeing with whatever the user prefers 99% of the time. He emphasizes that this systematic disagreement is just as problematic as sycophantic behavior, since both represent distorted AI goals that make the model untrustworthy. Moreover, small tweaks in the system prompt caused the behavior to swing wildly back up to agreement with the user 100% of the time, and even a single-word change in the system prompt caused the agreement rate to change by almost 50%.

This is all to say that it’s not so simple to test model behavior. Even small wording changes can drastically alter responses. These models are inherently stochastic and pinning down certain characteristics is more challenging than clean numbers on benchmarks would have you believe. Adler’s findings show how nascent the science of model testing is. As a result, measuring traits like alignment, robustness, or sycophancy isn’t just about finding the right metric, it’s about understanding how fragile and context-sensitive those metrics really are.

A whole lotta product announcements. Last week was dizzyingly full of announcements from big tech companies (Axios has a nice summary here). Both Apple and OpenAI are developing hardware for AI: Apple is rumored to be releasing glasses and OpenAI will “ship 100 million AI ‘companions’” that fit in your pocket —though not a phone, says Sam Altman. Meanwhile, Google announced literally 100 products at its developer conference including a terrifyingly realistic video generator and the AI mode for Google search. Expect to see a lot more AI on your Google search responses and a lot less links. Finally, Anthropic announced their next family of models, Claude 4, which they claim achieves state of the art performance on coding benchmarks.

This week's announcements show that the growth period for AI is far from over and these companies continue to iterate quickly on their products. Yet this breakneck pace directly contradicts what most Americans actually want from AI development. We are still firmly in the “move fast and break things” era of AI development, even as an Axios Harris poll found this week that 77% of American adults want companies to “create AI slowly and get it right the first time.”

Untangling the Trump administration’s AI stance. In a wide-ranging interview with the New York Times, Vice President JD Vance spoke on his concerns about AI. He emphasized the social risks involved with technology, but said he was not so concerned about the economic impacts.

There’s a level of isolation, I think, mediated through technology, that technology can be a bit of a salve. It can be a bit of a Band-Aid. Maybe it makes you feel less lonely, even when you are lonely. But this is where I think A.I. could be profoundly dark and negative. I don’t think it’ll mean three million truck drivers are out of a job. I certainly hope it doesn’t mean that. But what I do really worry about is does it mean that there are millions of American teenagers talking to chatbots who don’t have their best interests at heart?

Meanwhile, the tax bill that includes a 10-year moratorium on AI regulation from states just passed the House and is currently up for debate in the Senate, where it will almost certainly be changed and passed back down to the House. The Trump administration’s broader approach to AI has been characterized by deregulation and a focus on maintaining U.S. competitiveness. In January 2025, President Trump repealed the Biden-era executive order on AI and recently fired the head of the copyright office for suggesting that not all AI training is fair use, signaling a shift towards less federal oversight. But comments like this from Vance (and like the one from Steve Bannon we highlighted two weeks ago) indicate an interest in regulating at least some aspects of AI.

Thanks for reading! If you liked this post subscribe now, if you aren’t yet a subscriber.